This post is a combination of what was actually said at the conference, what is going on in the industry related to conferences and organizations, and, possibly the loudest part, what was not said. We’ll take those three things and expand upon them in this blog post.

Thing 1: What Was Said at the Conference

There were a few announcements and talks from Samsung about NAND manufacturing capacity. The gist: Samsung is not exiting the NAND market, but NAND is not a key area of focus for growth; that focus goes to HBM. Judging by the nods from the competitors in the crowd, it’s relatively safe to assume this is a unanimous sentiment amongst the DRAM+NAND manufacturing contingent of Samsung, SK Hynix, and Micron.

NAND is seen as an enabler of some interesting experimental architectures. For example, Samsung introduced a hybrid Type 3 CXL device that has both DRAM and NAND backing it as a persistent store that you address as a single module [1]. This device has existed in one form or another since FMS of 2022, so it’s not necessarily a “new” announcement by Samsung, but it is one that is maturing, which is new for CXL. When you look at how the market positioning for such a device has changed, it has gotten closer and closer to a CXL-attached replacement for NVDIMM or Optane [2]. This year at Memcon it was put forward as a device that has smart memory tiering baked in and functions like any other PMEM. Effectively, the NAND is now a means of power-fail persistence and a capacity boost behind the scenes, because end users want a 64-byte addressable interface, which NAND is ineffective at in real-world use cases.
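To put a rough number on why NAND is a poor fit for a 64-byte interface, here is a minimal back-of-the-envelope sketch. It only counts bytes moved, not latency. The 16 KiB page size is an assumption picked for illustration (it is not a figure from the conference or from Samsung), and `read_amplification` is a hypothetical helper name.

```python
# Back-of-the-envelope: how much data NAND has to move to serve one cache line.
CACHE_LINE_BYTES = 64        # the 64-byte granularity hosts want to address
NAND_PAGE_BYTES = 16 * 1024  # ASSUMPTION: illustrative NAND page size, not from the post


def read_amplification(request_bytes: int, page_bytes: int) -> float:
    """Bytes read from media per byte requested, for one page-aligned request."""
    pages = -(-request_bytes // page_bytes)  # ceiling division: whole pages touched
    return pages * page_bytes / request_bytes


amp = read_amplification(CACHE_LINE_BYTES, NAND_PAGE_BYTES)
print(f"One {CACHE_LINE_BYTES}-byte read pulls a full page: {amp:.0f}x amplification")
```

Under that assumed page size, every cache-line miss that falls through to NAND drags a whole page along with it, which is why putting DRAM in front as the addressable tier and leaving NAND behind the scenes makes sense.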

There were a few mentions of NAND here and there from some of the analysts during their panel. They effectively amounted to this: the DRAM and HBM demand explosion will eventually trickle its way down to storage, and to high-density, low-cost NAND in particular, some time late in calendar 2024 or early in 2025. But this will be mainly due to the need to store new data acquired to train the models, not necessarily the need to store the output of the models or the models themselves, which are relatively small post-training.

Thing 2: The Conference Circuit and Industry Organizations

For those who are in the industry, this may be obvious, but for those who are watching from the end-user perspective, this is something you should be aware of and keep an eye on. Like Memcon, there is a conference that traditionally focused on flash memory (NAND mostly), and it was called, pretty obviously, Flash Memory Summit, or FMS for short [3]. However, FMS has recently gone through some rebranding. When you say “FMS” or hear about it, it no longer means Flash Memory Summit; it means the Future of Memory and Storage conference. That is a pretty huge and distinct change, specifically pivoting away from “flash memory” toward broader industry engagement. There are still some artifacts left over from the rebranding [4], but it was obvious last year that the conference had to change to stay relevant. There just isn’t that much going on in the world of storage or NAND development at this point. Bigger SSDs, more layers, blah blah blah: it’s become more of the same, with mostly iterative changes and no fresh innovations driving what’s actually possible, cries in hard drive.

In the same vein as the FMS rebrand, SNIA is no longer a STORAGE-based organization. They have completely pivoted and rebranded as a DATA organization. This is again nuanced in its approach, but it is a very distinct departure from what SNIA was just a short year ago. When you look at the new focus on Data Experts and see what that comprises, it becomes clear that storing data is only a small piece of what is going to be focused on in the future.

Thing 3: What Was Not Said at the Conference

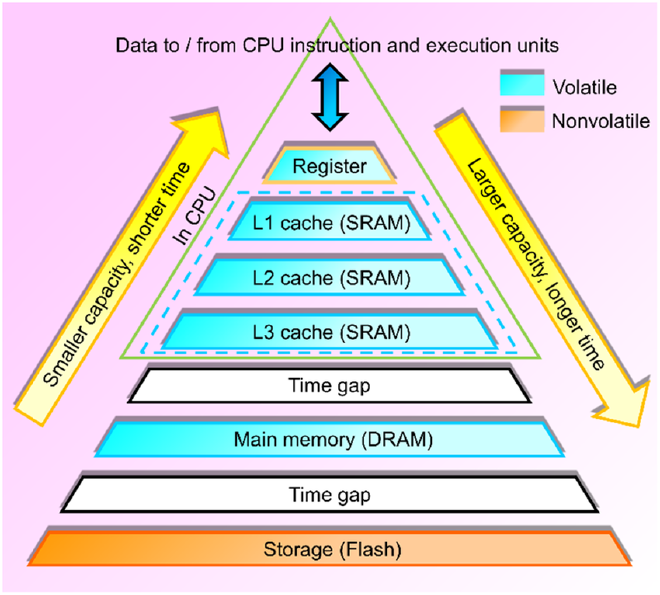

There was not a single mention of a new use case for flash memory, no new discussion of form factors, and no discussion of SLC-based memory solutions to bridge the gap from PMEM to NAND as a really high-performance tier of memory. Nothing. The silence from the obvious players there, who have a heavy hand in making SLC-cache-like devices, was strange to say the least. It also makes plenty of sense if they see memory expansion, with some cleverness like the CMM-H device, as the new tier that will replace the one Optane was playing in. I have to believe there is also plenty that cannot be said by any of these players, who are clearly looking to create a new type of memory that fits between DRAM and NAND like Optane did. There’s plenty of talk around MRAM, PMEM, etc. Optane went away, but it wasn’t just marketing smoke and mirrors that created the now-notorious memory pyramid.

It seems that NAND (or Flash, as it is most commonly referred to in the memory and tech industry) has finally found its place next to the hard drive at the bottom of the pyramid. Tape is not allowed to enter the chat.

1. https://semiconductor.samsung.com/us/news-events/tech-blog/samsung-cxl-solutions-cmm-h/

2. Its earlier iterations were a pure hybrid model: the device was addressable either as discrete NAND and discrete DRAM, or as a battery-backed, addressable persistent DRAM module on power fail.

3. It’s been a while since I’ve heard someone refer to it in passing like “Are you going to Flash Memory Summit this year?”; they just ask if you are going to FMS.

4. Like their URL being https://flashmemorysummit.com with Future of Memory and Storage branding until just recently, haha. They’ll get there.