The year 2023 has brought exciting advancements that have elevated Compute Express Link (CXL) from a promising technology to a capable product ecosystem. With products now available in the market, engineering teams and developers alike can evaluate the gains that can be achieved by extending system memory beyond the constraints of the DDR bus.

With the sizable number of contributing companies and products now available, it is hard to imagine that the initial CXL 1.0 specification was released only four years ago, in March 2019. Since then, we have seen the release of the 1.1, 2.0, and 3.0 specifications. And just this past week at the Supercomputing 2023 (SC23) conference, we saw the release of the 3.1 specification with needed updates in Fabric Management and shared memory security.

Looking back at this year, we can see some significant milestones. At the start of 2023, we saw the availability of CPUs from multiple vendors that supported the CXL 1.1 specification. These CPUs, along with CXL Memory Controllers, complete the minimum set of components needed to build a compute environment with memory that extends beyond the CPU. With these two components, we can build computing platforms with greater memory capacity and bandwidth than CPU-attached DDR channels alone allow.

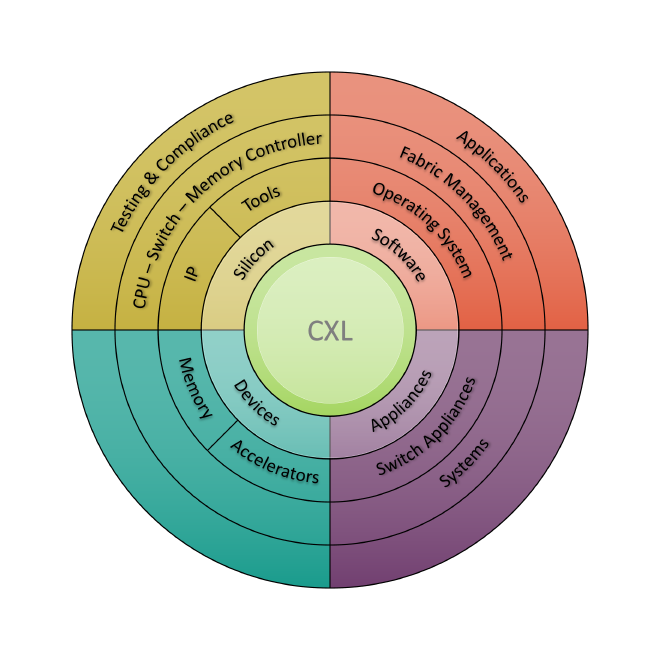

Putting the excitement of this technology aside, to understand where we are in the development of a functional CXL ecosystem, we first need to break it down into a few cohesive segments. The current CXL technology ecosystem can be divided roughly into four market segments: Silicon, Devices, Software, and Appliances. Let’s take a look at each segment.

Silicon

The first segment, Silicon, consists of the supply chain needed to architect, design, and manufacture CXL compliant silicon components. Since the initial release of the CXL 1.0 specification over four years ago, the Silicon segment of the market has seen more activity than the other segments. This follows the natural flow of development. Silicon generally begins with the development of IP in the form of cores, state machines, PHYs, and best practices that make CXL functionality a possibility. Multiple IP vendors, from the well-established Rambus & Synopsys to new entrants such as Panmnesia, have supplied silicon designers with the building blocks needed to accelerate the time to market of a functional ASIC.

Outside of IP providers, design tool vendors such as Cadence have added the steps needed to integrate CXL components into their design flows. Once the silicon is complete, multiple test equipment vendors offer protocol analyzers, such as those from Teledyne LeCroy or VIAVI, and compliance verification tools to confirm proper operation or to assist in debugging if anything is amiss. All of these companies can be considered service providers who support the development and verification of silicon-based products.

The expertise and support of all these service companies has brought the release of the following silicon products in 2023:

• CPUs from AMD & Intel
• FPGAs from AMD & Intel
• Memory Expander Controllers from Astera Labs, Microchip, and Montage Technology
• Retimers from Astera Labs, Microchip, and Broadcom
• CXL 2.0 compliant switches from XConn Technologies

With the availability of these components, we now have all the pieces to build the other segments of the CXL ecosystem such as Devices and Appliances. This of course doesn’t imply that we have reached the end of silicon innovation. Most of these silicon components are compliant with the CXL 1.1 specification, which would have been the only spec completed at the time of their design and tape-out. We eagerly await the upcoming release of CXL 2.0 compliant CPUs, which will better enable the pooling of shared memory.

Devices

The device segment of the CXL market consists of Memory Devices that implement the CXL Type-3 class, and Accelerators that implement the CXL Type-1/2 classes. Memory devices can be hot-swappable devices such as those in an E3.S form factor, add-in cards (AICs) that are placed in traditional PCIe slots within a server alongside classical DDR-based memory DIMMs, or even a sled form factor that can be shared by multiple sockets or servers.

To date, the market has focused on DRAM-based Memory Devices. Other types of memory can be supported with CXL, such as ReRAM or Memristor technology, but traditional DRAM has been the primary focus of the industry thus far. At the end of 2023, we now have CXL-based DRAM devices from all the major DRAM providers: SK hynix, Micron, and Samsung.

Only a few Accelerator type devices that implement the Type-1 or Type-2 protocols have been demonstrated at this point. These have mainly been storage devices (SSDs) that also have a CXL.cache host interface in place of traditional NVMe. Since these Accelerator devices are not pure memory devices that use the CXL.mem protocol, they require software changes on the host to use them. The performance advantage they offer is a real opportunity, but those advantages are slow to be realized as existing software is often slow to pivot to utilize new hardware capabilities.

While we have not seen them yet, we do expect CXL.cache based network cards in the future. These will enable lower latency network transactions as host applications will be able to bypass the kernel and directly communicate with the Network Card controller.

Software

While CXL is fundamentally a cache-coherent hardware interconnect technology, software is still needed to enable it. The software for CXL can be categorized into Operating System integration, Applications, and Management / Orchestration.

Since the publication of the CXL specification, work on Operating System support for CXL devices has been ongoing. Most of the discussions in the ecosystem have been about the changes needed in open-source operating systems such as Linux to support the use of ‘far memory’. Today, a modern Linux kernel can support DRAM-based memory devices attached using the CXL.mem protocol. The additional memory is presented to the operating system as an additional NUMA node that has only memory and no compute cores.
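As a rough illustration of what "a NUMA node with memory and no compute cores" looks like from user space, the sketch below scans the standard Linux sysfs directory `/sys/devices/system/node` and reports nodes whose `cpulist` is empty but whose `meminfo` shows a non-zero `MemTotal`. The function names are hypothetical and chosen for this example. Note the assumption: a memory-only node is only a hint that CXL memory may be present, since other memory types can also appear as CPU-less nodes.

```python
from pathlib import Path


def parse_cpulist(text):
    """Expand a sysfs cpulist string such as '0-3,8' into a list of CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        if "-" in part:
            low, high = part.split("-")
            cpus.extend(range(int(low), int(high) + 1))
        else:
            cpus.append(int(part))
    return cpus


def mem_total_kb(meminfo_text):
    """Extract MemTotal (in kB) from a per-node meminfo file."""
    for line in meminfo_text.splitlines():
        fields = line.split()
        if "MemTotal:" in fields:
            return int(fields[fields.index("MemTotal:") + 1])
    return 0


def memory_only_nodes(sysfs_root="/sys/devices/system/node"):
    """Return the ids of NUMA nodes that have memory but no CPUs."""
    found = []
    for node_dir in Path(sysfs_root).glob("node[0-9]*"):
        cpus = parse_cpulist((node_dir / "cpulist").read_text())
        total_kb = mem_total_kb((node_dir / "meminfo").read_text())
        if not cpus and total_kb > 0:
            found.append(int(node_dir.name[len("node"):]))
    return sorted(found)


if __name__ == "__main__":
    print("Memory-only NUMA nodes:", memory_only_nodes())
```

On a server with a CXL memory expander, the expander's capacity would typically show up as one of the node ids returned here.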

Once attached to the operating system, the far memory can generally be configured in one of two ways. It can be used as a separate tier of slower memory or interleaved with CPU memory. The choice depends on what best suits the target application. When used as a slower tier of memory, the operating system can transparently move hot and cold pages between ‘fast’ CPU-attached memory and ‘not-as-fast’ CXL-attached memory. This enables an application to expand memory capacity beyond what can be attached directly to a CPU without sacrificing (much) performance, as the hot pages will (hopefully) be maintained in CPU-attached memory.
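To make the tiering argument concrete, here is a toy model, not the kernel's actual placement policy: it ranks pages by access count, keeps the hottest ones in the fast tier, and computes the access-weighted average latency. The page names, access counts, and latency figures are all hypothetical values picked for illustration.

```python
def place_pages(access_counts, fast_capacity):
    """Put the most-accessed pages in the fast tier, the rest in the CXL tier."""
    # Sort by descending access count, breaking ties by page name.
    ranked = sorted(access_counts, key=lambda page: (-access_counts[page], page))
    fast = set(ranked[:fast_capacity])
    slow = set(ranked[fast_capacity:])
    return fast, slow


def average_latency_ns(access_counts, fast_pages, fast_ns, slow_ns):
    """Access-weighted average latency for a given page placement."""
    total_accesses = sum(access_counts.values())
    if total_accesses == 0:
        return 0.0
    weighted = sum(
        count * (fast_ns if page in fast_pages else slow_ns)
        for page, count in access_counts.items()
    )
    return weighted / total_accesses


if __name__ == "__main__":
    # Hypothetical workload: one hot page and two cold pages.
    counts = {"a": 90, "b": 5, "c": 5}
    fast, slow = place_pages(counts, fast_capacity=1)
    # Assumed latencies: 100 ns for CPU-attached DRAM, 200 ns for CXL memory.
    print(fast, slow, average_latency_ns(counts, fast, fast_ns=100, slow_ns=200))
```

With this skewed access pattern, two thirds of the pages live in the slower tier, yet the average latency stays close to the fast-tier figure because most accesses hit the hot page.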

The other option is to configure the far memory to be interleaved with CPU-attached memory. This is used when the application would benefit from greater memory bandwidth. An example would be an OLAP database that regularly streams a large data set from memory through the CPU to compute analytics. These workloads are not latency sensitive and benefit from bandwidth beyond what the CPU-attached memory channels alone can deliver.
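The bandwidth benefit of interleaving can be sketched with simple arithmetic. In this simplified model, a streaming workload spreads its pages across nodes according to integer weights, all nodes are read in parallel, and the slowest node to finish sets the pace. The bandwidth figures and node names below are hypothetical, and the model ignores real-world effects such as contention and latency.

```python
from functools import reduce
from math import gcd


def interleave_weights(bandwidth_gbps):
    """Reduce integer per-node bandwidths to the smallest page ratio."""
    divisor = reduce(gcd, bandwidth_gbps.values())
    return {node: bw // divisor for node, bw in bandwidth_gbps.items()}


def effective_bandwidth(weights, bandwidth_gbps):
    """Aggregate streaming bandwidth when pages are split by `weights`.

    Each node transfers its share in parallel, so the total time is set
    by the node that takes longest to deliver its share.
    """
    total_share = sum(weights.values())
    slowest_time = max(weights[node] / bandwidth_gbps[node] for node in weights)
    return total_share / slowest_time


if __name__ == "__main__":
    # Assumed figures: 300 GB/s of CPU-attached DRAM, 60 GB/s of CXL memory.
    bandwidth = {"dram": 300, "cxl": 60}
    weighted = interleave_weights(bandwidth)
    even = {"dram": 1, "cxl": 1}
    print("weights:", weighted)
    print("weighted interleave GB/s:", effective_bandwidth(weighted, bandwidth))
    print("1:1 interleave GB/s:", effective_bandwidth(even, bandwidth))
```

Under these assumptions, weighting pages in proportion to each node's bandwidth approaches the sum of the two bandwidths, while an even 1:1 split is held back by the slower CXL node. This is why the interleave ratio matters as much as the decision to interleave.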

Enabling these use cases is memory orchestration. This area of the software segment is still emerging and is a little behind operating system support for CXL. For simple memory expansion use cases, the memory can be statically configured with little user effort. As we progress beyond memory expansion to memory pooling, there will be a need to orchestrate memory dynamically. Integrating CXL Fabric Management with existing orchestration platforms such as Kubernetes or VMware will offer the ability to securely provision pooled memory through the same tools used to deploy applications today.

Appliances

The final segment of the CXL ecosystem is shared memory appliances that integrate memory controllers, switches, and software. There are multiple memory appliance vendors on the market today, such as IntelliProp and UnifabriX, with products that generally consist of a 2U 19” rack-mount enclosure with multiple CDFP ports & cables that connect to an FPGA-based Add-in-Card in a client. In this architecture, the FPGA-based adapter card in the client presents a CXL 1.1 compliant interface to the host CPU, since today’s CPUs support only the 1.1 specification. This enables today’s CPUs to access pooled or shared memory in addition to simple memory expansion. The internals of these appliances are proprietary, but if they are based on FPGA technology, the products have the potential to be updated to newer versions of the CXL specification as they become available.

How to get involved

With all this recent activity, now is a great time to get up to date and involved with CXL technology. Multiple forums are available where discussion of the technology takes place in an open environment, and anyone can join. For example, the Open Compute Project (OCP) has a working group for CXL named “Composable Memory Systems” that (as of late 2023) meets each week on Friday. The schedule can be found here and the past recordings are posted on YouTube here. The OCP Summit conference held each year in October has also become the common gathering place for the members of the ecosystem. Those sessions are also posted freely to YouTube. OCP 2022 and 2023 CXL sessions can be found here and here.